Quick, name the one technology that is hotter than Virtual Reality or Machine Learning today. Yep... the hottest thing right now is cryptocurrency. But did you miss out on the gravy train?

Well, judging from the dizzying price levels of the two heavyweights in the crypto world, Bitcoin and Ethereum, you could think so.

At the time of writing, the price of bitcoin had gone from 24¢ to $17,666 in 8 years, which is roughly a factor of 74,000.

It's nigh impossible for bitcoin to repeat that in the next eight years, as there is no room for a $1.3B bitcoin.
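To check the arithmetic behind those two numbers, here is a quick back-of-the-envelope sketch. It uses only the two prices quoted above; the variable names are mine.

```python
# Prices quoted in the text, in US dollars.
start_price = 0.24     # 24 cents, eight years before the time of writing
end_price = 17666.0    # price at the time of writing

# How many times over did the price multiply?
growth_factor = end_price / start_price

# What would one bitcoin cost if it multiplied by that factor again?
repeat_price = end_price * growth_factor

print(f"growth factor: {growth_factor:,.0f}")
print(f"price after a repeat: ${repeat_price / 1e9:.2f}B")
```

The factor comes out just under 74,000, and a repeat performance would indeed put a single bitcoin at roughly $1.3 billion.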

Bitcoin got its position as top dog by virtue of being first on the scene. Ethereum got there on the strength of its features, as its blockchain offers a mechanism to write distributed applications and smart contracts. It is vastly more versatile and more utilitarian than Bitcoin will ever be. For instance, you can use it to implement a cross-breeding and trading platform for virtual kitties with guaranteed pedigree. Sure, it's silly, but it does allow for, among other things, creating cryptographically secured scarce virtual objects.

So Ethereum for the win, then? Well, maybe not. Because of the meteoric rise in the price of Ether (the coin for Ethereum), developers of distributed apps may think twice about running their code on the Ethereum Virtual Machine. When an app is running, it is consuming precious gas. That gas is paid for in Ether, so its price will quickly become prohibitively expensive.
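To make that concern concrete, here is a minimal sketch of how a fee translates into dollars. The relationship itself (fee = gas used × gas price, with the gas price quoted in gwei, a billionth of an ether) is how Ethereum charges for execution. The specific numbers below are hypothetical, and the sketch assumes the gas price in gwei stays fixed while the price of Ether climbs, which in practice it may not.

```python
GWEI_PER_ETHER = 10**9  # 1 ether = 1,000,000,000 gwei

def fee_in_usd(gas_used, gas_price_gwei, ether_price_usd):
    """Dollar cost of a call: gas used x gas price, converted gwei -> ether -> USD."""
    fee_in_ether = gas_used * gas_price_gwei / GWEI_PER_ETHER
    return fee_in_ether * ether_price_usd

# Hypothetical app call: 100,000 gas at 20 gwei, at three different Ether prices.
for ether_price in (10, 100, 1000):
    print(f"ether at ${ether_price}: fee ${fee_in_usd(100_000, 20, ether_price):.2f}")
```

Under these assumptions the same call costs a hundred times more when Ether goes from $10 to $1,000, which is the squeeze app developers would feel.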

So if we discount Bitcoin and Ethereum as viable candidates for getting in late, what's left? With over 1,000 coins to choose from, is there one with a bright future, capable of rising to the top and displacing both Bitcoin and Ethereum? Spoiler: yes, there is.

There is an interesting newcomer by the name "NEO." I've seen it described as "China's Ether." I came across it reading a thread about Ethereum killers.

From what I've been able to ascertain, the NEO coin is not mined. If you want NEO, you have to buy it. However, the nice thing about NEO is that as you hold it in your crypto wallet, it generates GAS. Yep, the GAS that is used for running distributed apps, similar to how Ethereum executes apps. An interesting aspect, right there: gas gets generated by the coins, which means you do not have to spend your precious and rapidly appreciating crypto coin to use the Virtual Machine.

Another possible contender from the aforementioned thread is EOS, by the way. A commenter described it as: "If bitcoin is currency, and ethereum is gas, EOS is land". So that may be worth looking into.

So for the sake of argument, let's say we want to hedge our bets and get some of those dividend-yielding NEO coins. How would you purchase them? Well, they are best purchased using another crypto coin like Bitcoin or Ether. If you don't have those, I suggest you head over to the nearest bitcoin ATM in your city.

With bitcoins in your wallet, it is now time to purchase NEO on an exchange. I recommend Binance (referral link) which has been excellent for me. It has some amazing advantages that other exchanges do not have:

- No verification needed for withdrawals below 2 BTC.

- After signing up you can fund and trade immediately.

- No fee for withdrawal of NEO.

- Great trading interface.

- Easy to use.

- Based in stable Japan/Hong Kong without much government interference.

I personally learned this too late, but you do not want to end up with fractional NEO coins. Buying 10.0 or 10.01 NEO is fine. But if you end up with 10.99 NEO, then you can only transfer out the whole coins, and have a less useful 0.99 NEO left over.
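To see what a given purchase leaves you with, here is a small sketch that splits a balance into the whole coins you can transfer out and the fraction that stays behind. The function name is mine, and it only encodes the whole-coins-only rule described above.

```python
from decimal import Decimal

def split_balance(balance):
    """Split a NEO balance into (whole coins that can move, fraction left behind)."""
    balance = Decimal(str(balance))   # Decimal avoids float rounding surprises
    whole = balance // 1              # integer part of the balance
    leftover = balance - whole        # the part that cannot be transferred
    return int(whole), leftover

whole, leftover = split_balance("10.99")
print(f"transferable: {whole} NEO, stuck on the exchange: {leftover} NEO")
```

Running it on the 10.99 example gives 10 transferable coins and 0.99 left over, so it pays to place your order such that the balance lands on, or just above, a whole number.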

With the NEO coins in your Binance account, you can withdraw them for free to a wallet that you created yourself, on your own computer. I recommend the Neon Wallet. Before you withdraw from Binance to your own wallet, make absolutely sure you have printed out the private key of your newly created wallet on paper, and store it in a safe place. Lose your key, and you will lose your coins.

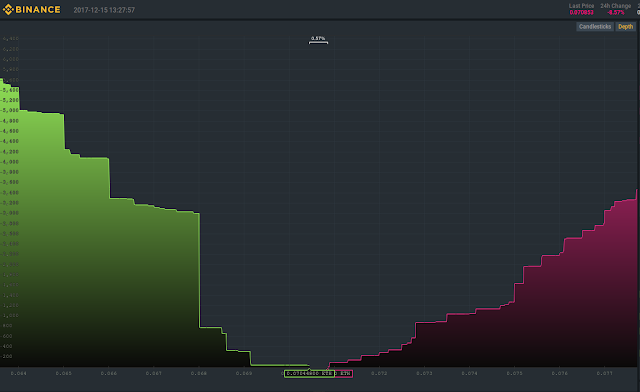

Let me conclude with Binance's nifty graph, which shows you in real time the volume of buyers and sellers at all price points.